Whether you are new to computers or have been around them for years, understanding their architecture can greatly enhance your appreciation of how they function. Everything around us has its own architecture: humans, vehicles, nature, and even the buildings we admire. Computers are no different, and their architecture defines how they operate. Just like in the show How It’s Made, learning how something works gives us a greater appreciation for it and a deeper connection to it.

In this article, I will introduce you to two foundational computer architectures: the Von Neumann Architecture and the Harvard Architecture.

Von Neumann Architecture: A Brief Overview

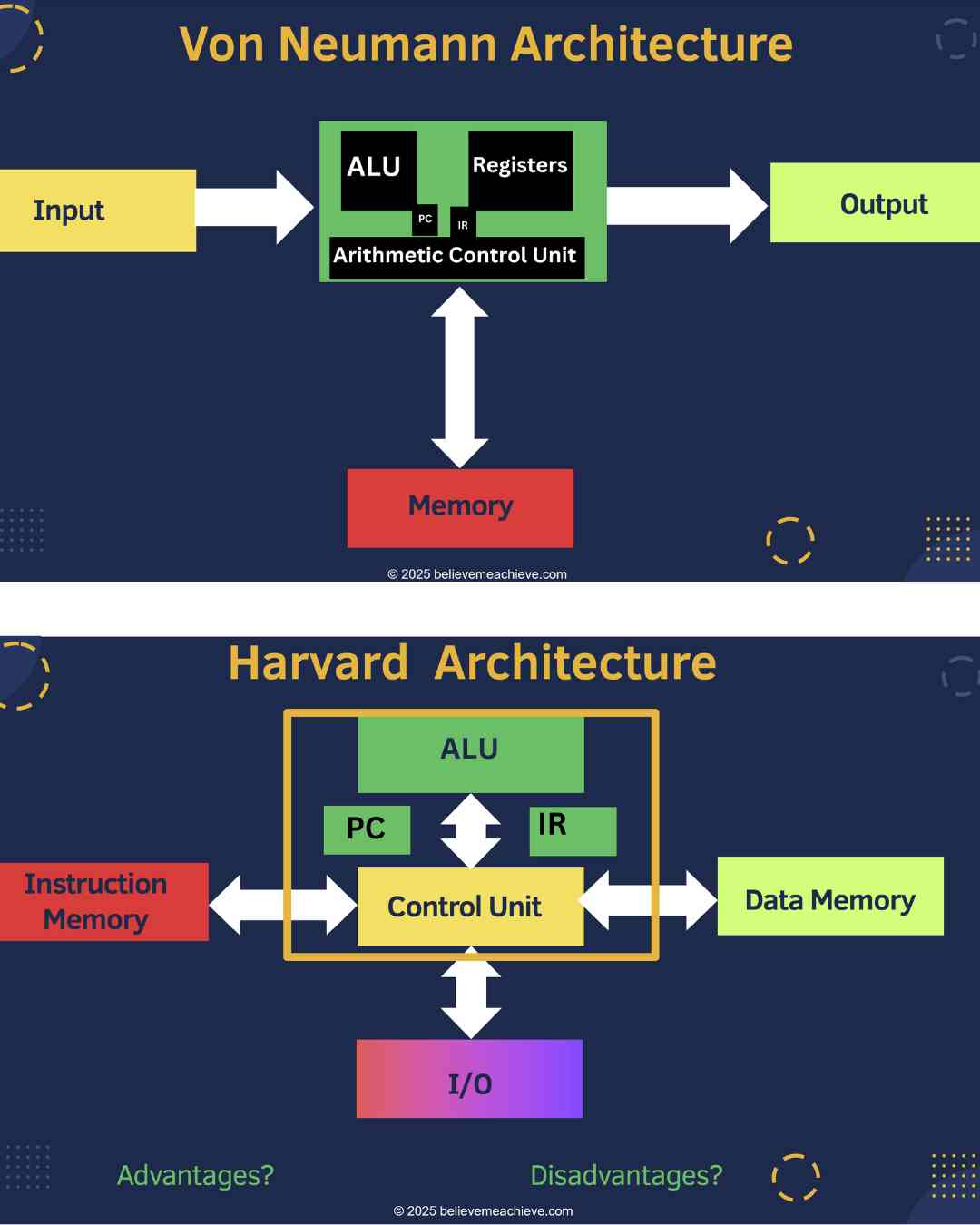

John von Neumann, a mathematician, physicist, computer scientist, and engineer, was one of the original pioneers behind the stored-program digital computer, along with other luminaries like Alan Turing and Claude Shannon. In 1945, he described the design now known as the Von Neumann Architecture: a processor containing a control unit, an ALU (Arithmetic Logic Unit), and registers, connected to memory and input/output systems.

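The processor handles each instruction in a repeating cycle of five steps: Fetch, Decode, Load, Execute, and Store. To help you remember them, I’ve come up with a fun mnemonic: Friends Don’t Let Enthusiasm Spiral. Let’s break down this process: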

- Fetch: The first step involves the control unit retrieving an instruction from memory via three buses. The address bus carries the memory address that locates the instruction, the control bus carries control signals (such as a read signal and timing), and the data bus carries the instruction back to the processor.

- Decode: In this step the control unit converts the instruction into control signals, which direct components such as the ALU, the registers, and I/O devices. The control unit is essentially the coordinator of the computer, managing data flow and telling the other components what to do.

- Load: Here, operands or data are loaded into the processor. This step is optional and depends on the instruction. The data travels over the data bus, and importantly, both instructions and data are stored in the same memory space and accessed via the same data bus.

- Execute: The control unit activates the ALU to execute the instruction. The ALU performs arithmetic or logic operations (like addition, subtraction, AND, OR, etc.) if the instruction calls for it.

- Store: The final step is storing the result back into memory. This is also optional, as not all instructions require it. The address and control signals are sent over their buses, and the result is written to memory over the data bus.
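To make the five steps concrete, here is a minimal sketch of a toy machine in Python. It is not a real instruction set: the `(opcode, address)` instruction format, the opcode names (`LOAD`, `ADD`, `STORE`, `HALT`), and the `pc` and `acc` registers are all assumptions I made up for illustration. The one detail that does come from the architecture itself is that the program and its data live in the same `memory` list.

```python
# Toy von Neumann machine. The (opcode, address) instruction format and the
# opcode names are invented for this sketch; they are not a real instruction set.

def run(memory):
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator: register holding the ALU's working value
    while True:
        # Fetch: read the instruction stored at address pc
        instruction = memory[pc]
        pc += 1
        # Decode: split the instruction into an opcode and an operand address
        opcode, address = instruction
        if opcode == "HALT":
            return memory
        # Load (optional): read an operand from the same memory
        operand = memory[address] if opcode in ("LOAD", "ADD") else None
        # Execute: act on the decoded opcode
        if opcode == "LOAD":
            acc = operand
        elif opcode == "ADD":
            acc = acc + operand
        elif opcode == "STORE":
            # Store (optional): write the result back to memory
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Instructions and data sit side by side in one memory.
memory = [
    ("LOAD", 4),   # address 0: acc = memory[4]
    ("ADD", 5),    # address 1: acc = acc + memory[5]
    ("STORE", 6),  # address 2: memory[6] = acc
    ("HALT", 0),   # address 3: stop
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: the result goes here
]
run(memory)
print(memory[6])  # prints 5
```

Notice that nothing stops an instruction from pointing at address 0 and treating another instruction as data. That is a direct consequence of the shared memory space.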

Because accessing main memory is time-consuming, the CPU uses onboard registers for quick access. Some of these registers are available to programmers for temporary data storage, while others assist in the instruction cycle.

Important Registers:

However, the Von Neumann Architecture faces a limitation known as the "Von Neumann Bottleneck." Because data and instructions must pass through the same bus, the processor cannot fetch an instruction and read or write data at the same time. Even the fastest CPU spends much of its time waiting for instructions and data to arrive.
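A rough way to see the bottleneck is to count how many times a program needs the shared bus. The sketch below is a deliberately simplified model under my own assumptions: every bus transfer takes one time slot, every instruction needs one fetch, and only the hypothetical `LOAD` and `STORE` opcodes touch data in memory. Real processors use caches and other techniques that this ignores.

```python
# Simplified model: one shared bus, one transfer per time slot.
# Opcode names are hypothetical and only serve the illustration.

def shared_bus_slots(program):
    """Count the bus time slots a program needs on a single shared bus."""
    slots = 0
    for opcode in program:
        slots += 1  # every instruction must be fetched over the bus
        if opcode in ("LOAD", "STORE"):
            slots += 1  # its data access has to wait for the same bus
    return slots


program = ["LOAD", "ADD_REGISTERS", "STORE", "LOAD", "STORE"]
print(shared_bus_slots(program))  # prints 9
```

Five instructions need nine bus slots here, because four of them have to use the bus twice, one transfer after the other.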

The Harvard Architecture: A Solution to the Bottleneck

The Harvard Architecture addresses this problem by using two separate buses, one for instructions and another for data. Not only can these buses operate concurrently, but they also connect to separate memory spaces. This means that an instruction and a piece of data can be accessed at the same time, reducing delays. Moreover, since the two memories are separate, the instruction and data memories don't need to be the same size.
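Here is a minimal sketch of the separate memory spaces, again using a made-up `(opcode, address)` format and invented opcode names. The two lists, `instruction_memory` and `data_memory`, stand in for the two memories. They have different sizes, and address 0 means something different in each. Plain Python runs one step at a time, so this shows the separation but not the concurrent access that real hardware gets from having two buses.

```python
# Toy Harvard machine. Opcodes and the (opcode, address) format are
# hypothetical; the point is only that code and data live in separate memories.

def run_harvard(instruction_memory, data_memory):
    pc = 0   # program counter: indexes instruction memory only
    acc = 0  # accumulator register
    while True:
        # Instruction bus: fetch from instruction memory
        opcode, address = instruction_memory[pc]
        pc += 1
        if opcode == "HALT":
            return data_memory
        # Data bus: operands and results use data memory
        if opcode == "LOAD":
            acc = data_memory[address]
        elif opcode == "ADD":
            acc = acc + data_memory[address]
        elif opcode == "STORE":
            data_memory[address] = acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


instruction_memory = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", 0)]
data_memory = [2, 3, 0]  # a different size than the instruction memory

run_harvard(instruction_memory, data_memory)
print(data_memory[2])  # prints 5
```

Because instructions can only address `data_memory`, a program in this model has no way to read or overwrite its own instructions.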

By having separate pathways for data and instructions, Harvard Architecture provides greater speed and efficiency, sidestepping